theme: phrenology

Automated Inference on Criminality using Face Images

2016

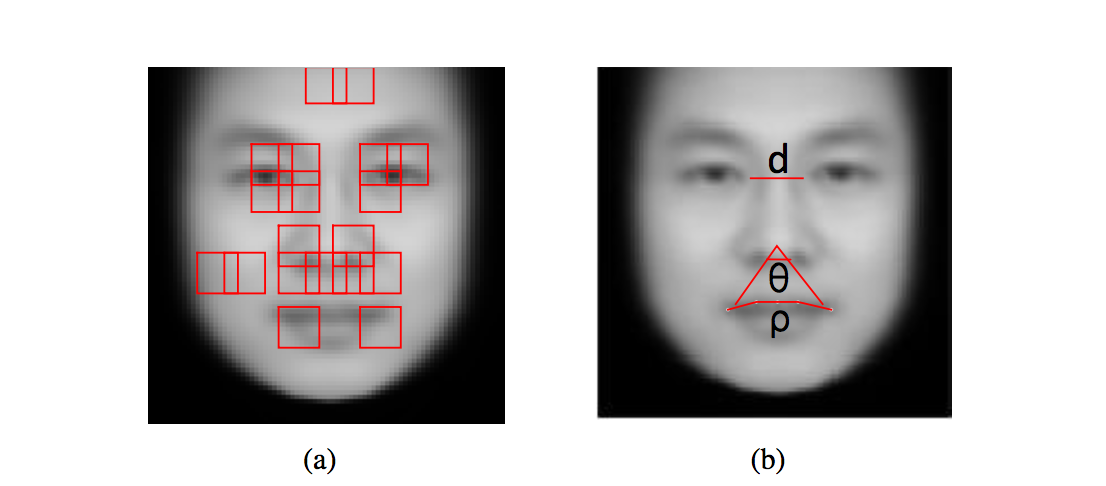

Figure 4. (a) FGM results; (b) Three discriminative features ρ, d and θ. ['the curvature of upper lip denoted by ρ; the distance between two eye inner corners denoted by d; and the angle enclosed by rays from the nose tip to the two corners of the mouth denoted by θ']

(Wu and Zhang, 2016)

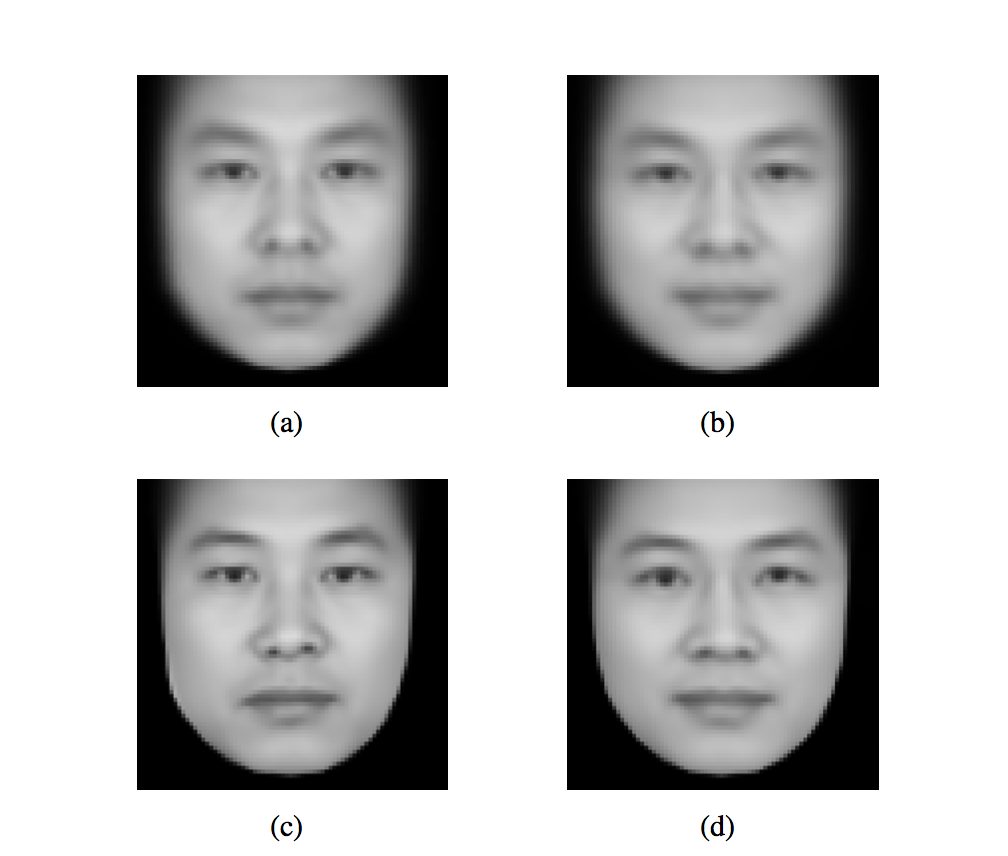

Figure 6. (a) and (b) are 'average' faces for criminals and non-criminals generated by averaging of eigenface representations; (c) and (d) are 'average' faces for criminals and non-criminals generated by averaging of landmark points and image warping.

(Wu and Zhang, 2016)

The study contains virtually no discussion of why there is a “historical controversy” over this kind of analysis — namely, that it was debunked hundreds of years ago. Rather, the authors trot out another discredited argument to support their main claims: that computers can’t be racist, because they’re computers.

(Biddle, 2016)

Absent, too, is any discussion of the incredible potential for abuse of this software by law enforcement. Kate Crawford, an AI researcher with Microsoft Research New York, MIT, and NYU, told The Intercept, “I’d call this paper literal phrenology, it’s just using modern tools of supervised machine learning instead of calipers. It’s dangerous pseudoscience.”

(Biddle, 2016)